Keeping track of the latest audio and video tech can be daunting. Thankfully, High-Def Digest has you covered. Welcome to our new Home Theater 101 series, where we'll be explaining emerging technologies while also recommending the very best possible A/V gadgets n' gear you can buy at your particular budget level...

Before a mob forms and screams for my head, please let me apologize for the provocative title and clear up a couple of things. I'm not arguing against HDR (high dynamic range) content, nor am I calling for its demise.

In fact, I want to champion HDR content and hope for its success. There is much to love and appreciate in the pursuit of better contrast, richer blacks and wider, improved color reproduction, especially for someone who wants to recreate a true theatrical experience at home.

The purpose of this article is to share specific grievances and frustrations with the implementation of HDR10 across Ultra HD Blu-ray and UHD streaming, and explain why Dolby Vision is superior and should eventually win this current battle for my wallet. So, without further ado, welcome to

We've already covered the basics in previous Home Theater 101 articles, What is HDR? and What is Dolby Vision?, so check those out if you need a refresher.

As any long-time home theater enthusiast knows, the introduction of new toys rarely goes smoothly or without a hitch. Those of us infected with the ridiculous obsession with upgrading to the latest and greatest (the terminal and incurable condition affectionately referred to as "upgraditis") are usually the guinea pigs for manufacturers promising better quality in some small area or another. And sometimes it's difficult to remain objective, to criticize or admit the faults and drawbacks of the latest addition to the setup, given the amount of money spent to own the new, shiny toy.

When Blu-ray was officially introduced to the market in 2006, it was fraught with a variety of issues and a mostly terrible selection of titles, with only a few good ones mixed in. A bigger problem still was the awful picture quality of every single one of those movies, mainly because studios simply recycled outdated DVD masters.

Today, we're facing a similar problem, with many 4K Ultra HD (UHD) titles being released from upconverted 2K digital intermediates (DIs). The results aren't always bad, but the process can introduce unwanted artifacts, such as aliasing, an unnatural grain structure or simply poor resolution. This is precisely the problem with recent releases like 3:10 to Yuma (2007), xXx: The Return of Xander Cage and the first Iron Man, which was released on UHD in Germany.

To be fair, sharper image quality is only one small advantage of UHD discs. The real reason for the format's existence is HDR and a wider color gamut (WCG), capable of covering at least 90% of the DCI-P3 (Digital Cinema Initiatives P3) color space used in cinemas today. But this is where we encounter one of our first problems, because the quality of those features sadly depends on the television set. Consumers are not always buying true 4K material, but the bigger issue is that the results of the HDR mastering process depend on the capabilities of each individual TV.

In other words, while there is an agreed-upon HDR standard from the Ultra HD Forum, there isn't a specific guideline for how TV makers must display it. Ultimately, this means the same 4K HDR content can be handled differently from one manufacturer to the next.

So, what are these standards and how can this create an issue between the many TV sets?

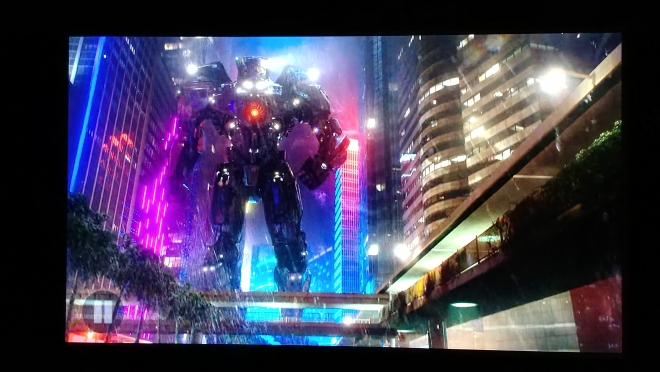

Picture taken on high-def camera with HDR, but not an accurate representation of the actual content

According to the Forum, HDR content can be mastered at up to 4,000 "nits" of peak brightness in 10-bit color depth, though current content tops out at 1,000 nits. Most sets on the market right now, however, range between 500 and 700 nits. The higher-end Sonys and Samsungs have tested a tad brighter, and LG promises its more expensive line of OLEDs is capable of over 1,000 nits. I'm pointing out these differences because the question then becomes: what happens to highlights brighter than the TV can actually display? That is decided by the manufacturer, which could lead either to better detail in the specular highlights or to a loss of it, depending on the TV model purchased.
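
To make the question of those extra nits concrete, here is a minimal Python sketch comparing two hypothetical ways a TV might handle highlights mastered above its peak: hard clipping and a simple "soft knee" roll-off. The 600-nit display peak, the 1,000-nit mastering peak and the 75% knee point are illustrative assumptions, not any manufacturer's actual algorithm.

```python
def hard_clip(nits: float, display_peak: float) -> float:
    """Clip anything brighter than the panel can show.
    Every highlight above the peak collapses to the same value, so detail is lost."""
    return min(nits, display_peak)


def soft_knee(nits: float, display_peak: float, source_peak: float,
              knee_ratio: float = 0.75) -> float:
    """Hypothetical roll-off: values below the knee pass through unchanged,
    and the range [knee, source_peak] is squeezed linearly into [knee, display_peak]."""
    if source_peak <= display_peak:
        return min(nits, display_peak)  # the panel can show everything; nothing to compress
    knee = knee_ratio * display_peak
    if nits <= knee:
        return nits
    # Linear compression above the knee keeps brighter highlights brighter than dimmer ones
    t = (min(nits, source_peak) - knee) / (source_peak - knee)
    return knee + t * (display_peak - knee)


if __name__ == "__main__":
    display_peak, source_peak = 600.0, 1000.0  # assumed TV and mastering peaks, in nits
    for level in (300.0, 650.0, 800.0, 1000.0):
        print(level, hard_clip(level, display_peak),
              round(soft_knee(level, display_peak, source_peak), 1))
```

With these assumed numbers, hard clipping maps 650, 800 and 1,000 nits all to 600, so distinct highlights merge into flat white, while the roll-off keeps them distinct (roughly 504.5, 545.5 and 600 nits) at the cost of dimming them. Real TVs use more sophisticated curves, often applied to the PQ-encoded signal rather than to linear nits, but the trade-off is the same one described above.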

When comparing the same still image, a Samsung TV can look brighter, but it can also wash out the finer details in the whitest whites. Meanwhile, a higher-end Vizio will seem darker, but whites remain brilliant while the tiny, fine lines stay clear. The same goes for very dark scenes, where shadows may crush small background details on one TV but less so on another. The same movie on UHD can genuinely look better and brighter on one TV than on another. This also complicates calibration. When adjusting contrast, some TVs will reach 1,000 nits with little issue, while other sets could start blooming before even coming close. The same goes for adjusting brightness, where the goal is a black level below 0.05 nits, but there is little guarantee a new TV can reach that level without crushing shadow detail. Because manufacturers decide how each model handles peak brightness, there is plenty of room for discrepancies in picture quality (PQ), and for disagreement between viewers.

On top of that, HDR10 is an open standard (no licensing fees for using or advertising it), which allows manufacturers and studios to use it as they see fit without any consensus on how the content should be displayed. HDR10 also relies on static metadata, set once at the start of the movie, like someone simply flicking a switch and calling it good. The tone and gamut mapping doesn't change or adjust from scene to scene or follow the stylized cinematography. Everything is dialed to specific peak targets, and they stay that way from start to finish, regardless of whether that reflects the filmmakers' intentions or suits the scene.

On the other hand, Dolby Laboratories aims higher, designing its HDR standard and mastering process with the future capabilities of television in mind. Dolby Vision is a proprietary format, meaning manufacturers pay a licensing fee and everyone involved must meet the same, universal standard. You can read Steve Cohen's great article for a breakdown of those standards, but for our purposes, I'll only mention that, with the promise of 68 billion colors, primaries already appear fuller and more true to life on the current slate of televisions, alongside a richer, more varied array of secondary hues. Much of the format's already noticeable superiority comes from dynamic metadata: a far more active tone and gamut mapping process in which peak targets are adjusted scene by scene or even frame by frame.
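
The difference between static and dynamic metadata can be sketched in a few lines of Python. Under HDR10, the TV gets film-wide values such as MaxCLL (maximum content light level) and picks one tone curve for the whole movie; with dynamic metadata, the curve can follow each scene's own peak. The scene names, nit values, 600-nit panel and simple knee roll-off below are all hypothetical, and this is not Dolby's actual mapping algorithm.

```python
from dataclasses import dataclass

DISPLAY_PEAK = 600.0  # hypothetical TV peak brightness, in nits


@dataclass
class Scene:
    name: str
    pixels: list[float]  # a few sample luminance values, in nits

    @property
    def peak(self) -> float:
        return max(self.pixels)


def tone_map(nits: float, source_peak: float, display_peak: float = DISPLAY_PEAK,
             knee_ratio: float = 0.75) -> float:
    """Illustrative knee roll-off: unchanged below the knee, linear compression above it."""
    if source_peak <= display_peak:
        return min(nits, display_peak)
    knee = knee_ratio * display_peak
    if nits <= knee:
        return nits
    t = (min(nits, source_peak) - knee) / (source_peak - knee)
    return knee + t * (display_peak - knee)


def render(scenes: list[Scene], dynamic: bool) -> dict[str, list[float]]:
    film_peak = max(s.peak for s in scenes)  # static: one value for the whole film
    out = {}
    for s in scenes:
        peak = s.peak if dynamic else film_peak  # dynamic: per-scene value
        out[s.name] = [round(tone_map(p, peak), 1) for p in s.pixels]
    return out


scenes = [
    Scene("night street", [5.0, 120.0, 500.0]),
    Scene("desert noon", [200.0, 700.0, 1000.0]),
]
print("static: ", render(scenes, dynamic=False))
print("dynamic:", render(scenes, dynamic=True))
```

With these made-up values, the static approach dims the night scene's 500-nit highlight to about 463.6 nits even though a 600-nit panel could show it exactly as mastered, while the per-scene approach leaves it untouched. The desert scene comes out identical either way, because its own peak is the film-wide peak.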

Because Dolby Vision is proprietary, manufacturers must calibrate picture settings to Dolby's requirements. The drawback is that this limits the calibration efforts of the end user. That's not to say owners of a new, shiny 4K HDR display can't make alterations, but rather that their adjustments shouldn't stray far from the prescribed out-of-the-box settings. This may explain why we have yet to see Dolby Vision on projectors, and we might not for several more years. The technicians at Dolby have a set goal in mind for how the picture should look. TVs can be built to those specific settings because they are not quite as sensitive as projectors, whose image depends on the condition of the room and must be adjusted accordingly. I don't have any official word from Dolby on why Dolby Vision has yet to appear in projectors, but I suspect its technicians take into consideration that not all PJ owners watch in very dark rooms or environments where the lighting is controlled.


Granted, the strengths of Dolby Vision sound fantastic on paper, but if most current televisions, including 2017 models, can't even reach 1,000 nits and max out at 10-bit color depth, then what does it matter? The point is that as excited as I am about HDR10 (and I am, owning every single UHD disc released thus far, including some rather questionable titles and a few imported from other parts of the world), I am even more excited about Dolby Vision. The standard is the future of home theater, with the promise of a better representation of a filmmaker's vision. And we can already see the benefits and differences, especially now with three UHD titles featuring Dolby Vision HDR and plenty more coming in the next few months.
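
The gap between 10-bit panels and Dolby Vision's 68 billion colors is easy to quantify. Each extra bit doubles the number of code values per channel, and the theoretical color count is that per-channel figure cubed, assuming the usual three color channels with equal bit depth. A quick Python check:

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Theoretical number of distinct colors when each channel has 2**bits code values."""
    return (2 ** bits_per_channel) ** channels


for bits in (8, 10, 12):
    print(f"{bits}-bit: {2 ** bits:,} levels per channel, {color_count(bits):,} colors")
```

10-bit works out to 1,024 levels per channel and 1,073,741,824 (about 1.07 billion) colors, while 12-bit gives 4,096 levels and 68,719,476,736 colors, which is where the 68 billion figure comes from.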

Admittedly, the differences are very subtle and arguably negligible to some, but they are nonetheless notable improvements that give us a glimpse of what is possible. On the more obvious and immediate side of things, there are CG animated films like The LEGO Movie and The LEGO Batman Movie, available on VUDU by redeeming the digital copy codes included in the UHD packages. In both cases, viewers can enjoy a significantly richer and far more energetic color palette than in the HDR10 versions, which are themselves gorgeous presentations. The same goes for Despicable Me and its sequel. A point of comparison in part one is Gru talking to his neighbor Fred, showing more vibrant yellows in his shirt against a luminous blue sky and lush green grass. In part two, when the two men are playing cards, the barrel on which they're playing and the container behind them glow a more accurate orange, while the white of the snow and the large number on the side sparkle a little brighter. And in either movie, Kyle's teal-colored hair appears more intense and insect-like. Amazingly, the improved, deeper black levels make even more detail visible in the individual LEGO blocks, Batman's costume, Gru's jacket and Dr. Nefario's lab, giving each 4K presentation jaw-dropping realism and an incredible three-dimensional depth. But what about live-action movies?

I can already hear some dissenters chanting, "That's all well and good, but how does Dolby Vision make my favorite live-action movie any better?" Well, going back to VUDU, in something like Unforgiven or Mad Max: Fury Road, where the stylized photography shows a more restrained palette, the advantage lies not so much in the colors (though flesh tones in MM:FR appear more natural and less reddish than on the UHD disc) as in the contrast and brightness levels. In Clint Eastwood's award-winning western, there is more noticeable detail in the fluffy clouds against a bright blue sky, whereas the HDR10 version makes them look mistier. In George Miller's dystopian actioner, the faded, cracked white paint on the War Boys is more realistic, while individual grains of sand and the rust spots on the War Rig are more visible.

When it comes to brightly colorful films, such as Pan or Pacific Rim, the Dolby Vision version easily wins the contest, again with better detail in the specular highlights and against the blackest blacks, especially in the case of Guillermo del Toro's anime-inspired sci-fi fantasy. What really took me by surprise was seeing the same sort of improvement when comparing Power Rangers. The opening sequence, with the Red Ranger crawling on the floor, reveals more detail in the dark distance while maintaining excellent contrast throughout. As Zordon inches closer to the camera, we can better make out what I assume are blue veins around his face and shoulders, while the rest of his body takes on a bluish gray tone. Later on, the colorful Ranger outfits are absolutely brilliant and glowing, while the fine black lines in the design stay extraordinarily clear. When the kids stand around the Morphing Grid to finally morph, the intensely bright light doesn't wash out the individual wrinkles and creases in their clothes, while the rest of the room remains covered in dark shadows.

In the end, as much as I enjoy the current slate of 4K UHD Blu-ray titles, I am slowly coming to the conclusion that HDR10 sucks. Its limitations mean it will soon fail to keep up with the future of television, which is why there have already been announcements about HDR10+ (the + adds dynamic metadata) for Samsung TVs and Amazon streaming content. Dolby Vision, on the other hand, is ready for that demand and already showing its advantages.


Founded in April 2006, High-Def Digest is the ultimate guide for High-Def enthusiasts who demand only the best that money can buy. Updated daily and in real-time, we track all high-def disc news and release dates, and review the latest disc titles.

Copyright © 2024 LLC, MH Sub I, LLC dba Internet Brands. All rights reserved.